Unable to log in to the NetCloud machine from CLI |

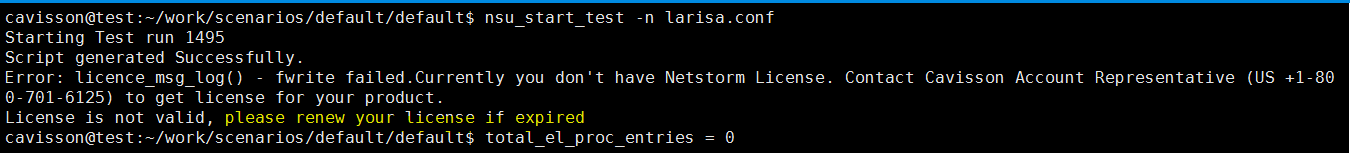

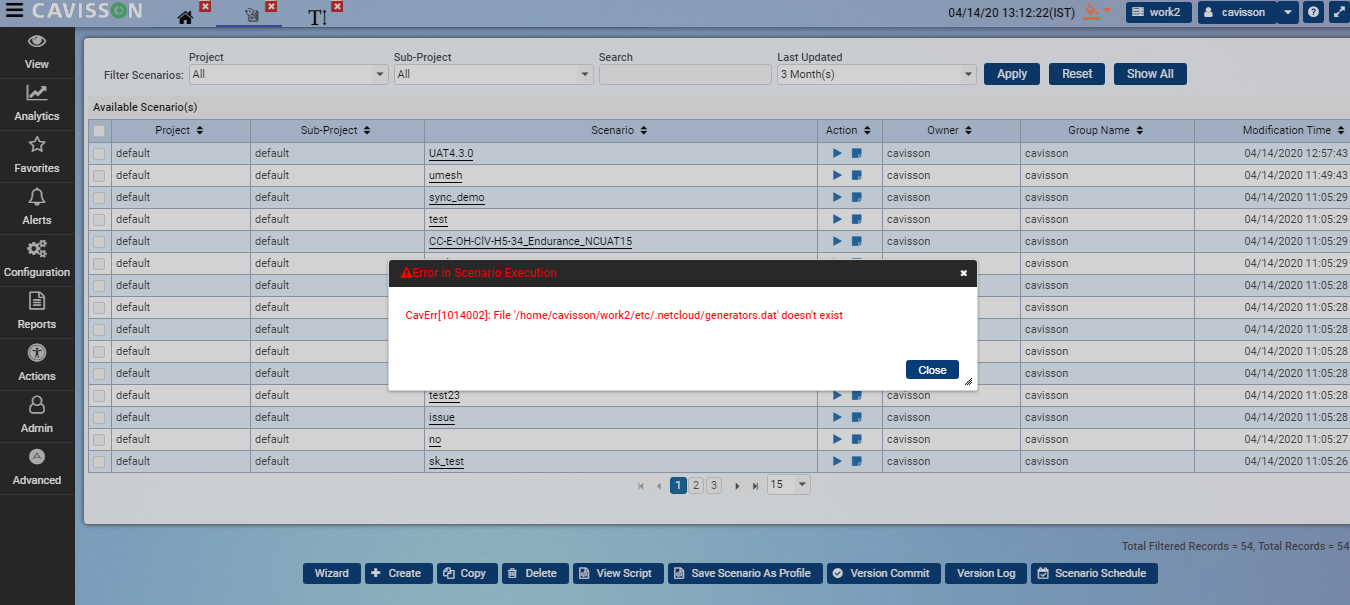

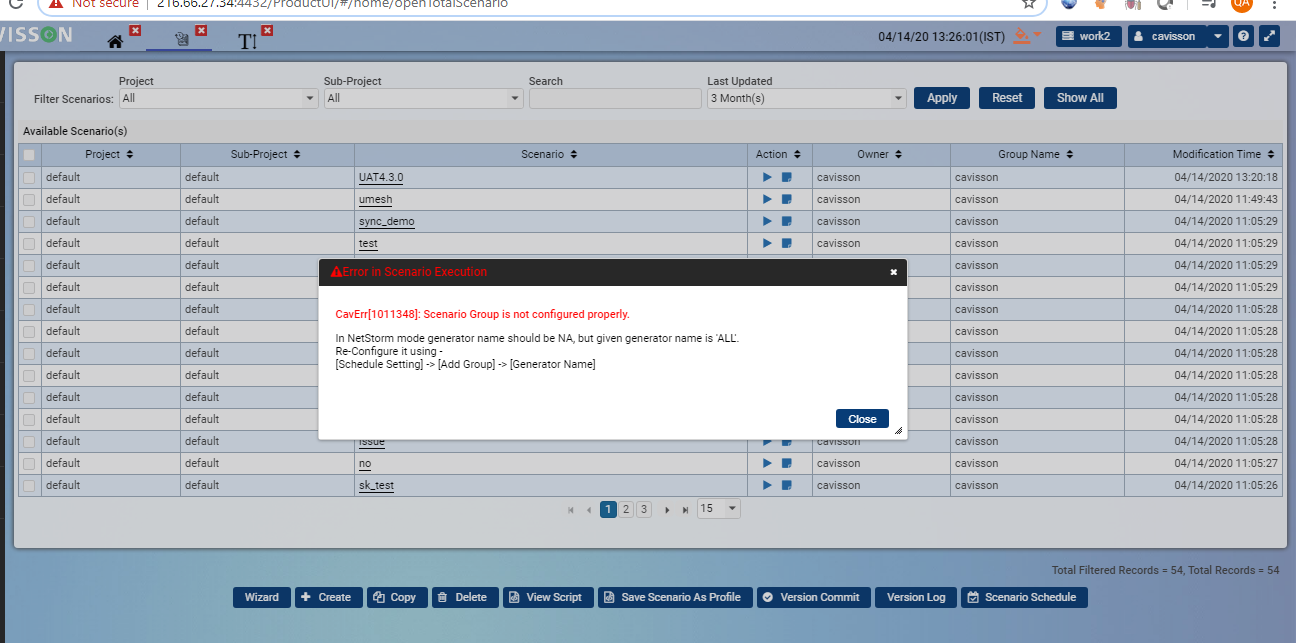

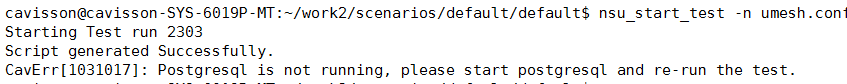

Unable to start NetCloud test. Getting error. |

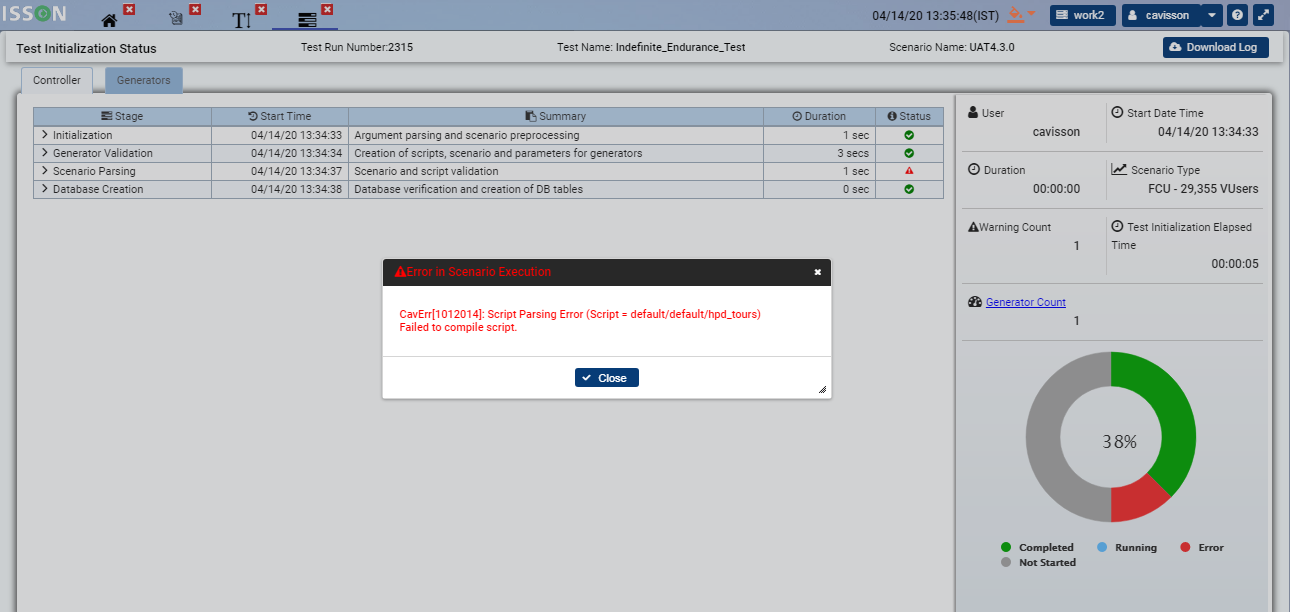

| Possible Reasons #6 | Script is not compiled.  |

| Steps to Diagnose | InitScreen shows an error about the script. |

| Solution | Correct the script in Script Manager. |

| Possible Reasons #7 | PostgreSQL service is not running on controller.  |

| Steps to Diagnose | Check with the ps command whether a PostgreSQL process is running on the controller. |

| Command Used | ps -ef |grep postgresql |

| Solution | Start the PostgreSQL service. |
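A process listing shows that PostgreSQL was started, but not whether it accepts connections. As a complementary check, PostgreSQL's own `pg_isready` utility reports this through its exit status. The sketch below assumes `pg_isready` is installed on the controller and that the default host and port apply; `describe_pg_isready` is a hypothetical helper, not a NetCloud command.

```shell
# describe_pg_isready CODE: translates a pg_isready exit status into a
# short message. Hypothetical helper for illustration only.
describe_pg_isready() {
    case "$1" in
        0) echo "accepting connections" ;;
        1) echo "rejecting connections (for example, still starting up)" ;;
        2) echo "no response" ;;
        3) echo "no attempt made (for example, invalid parameters)" ;;
        *) echo "unexpected exit status $1" ;;
    esac
}

# Usage on the controller (assumes default host and port):
#   pg_isready -q
#   describe_pg_isready $?
```

A status of 2 together with no PostgreSQL process in the ps output points to a stopped service.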

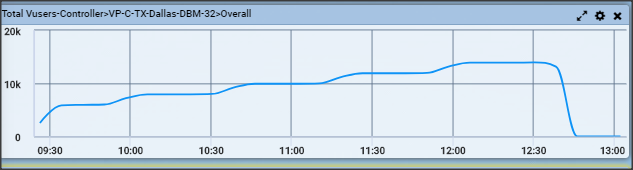

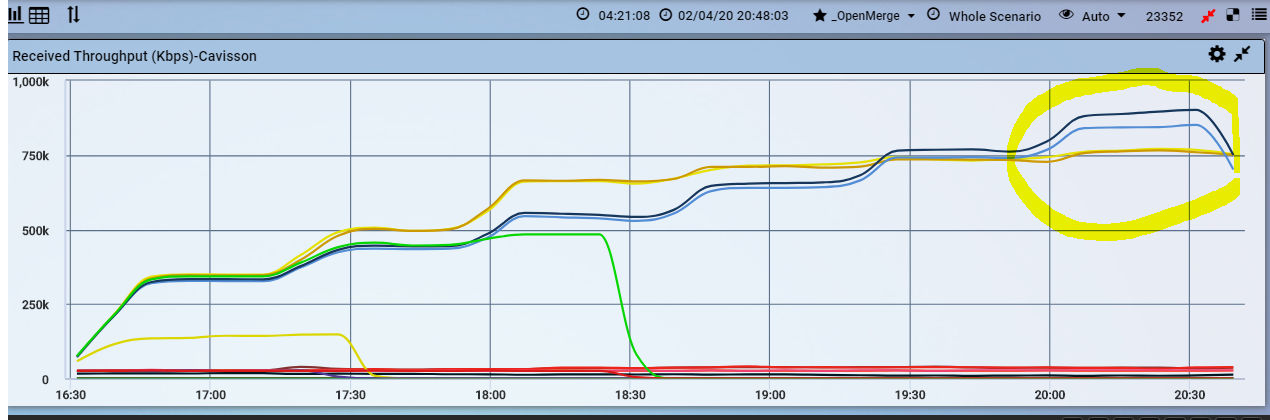

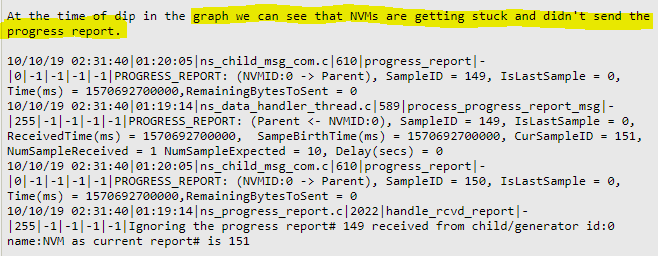

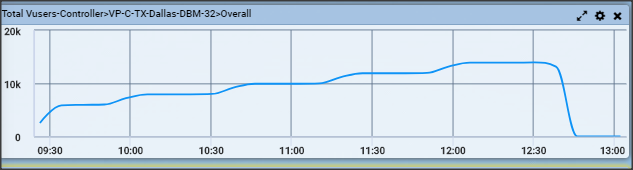

Users went down |

| Possible Reasons #1 | 1) The test stopped on some or all generators. 2) Some CVMs were killed on the generators. |

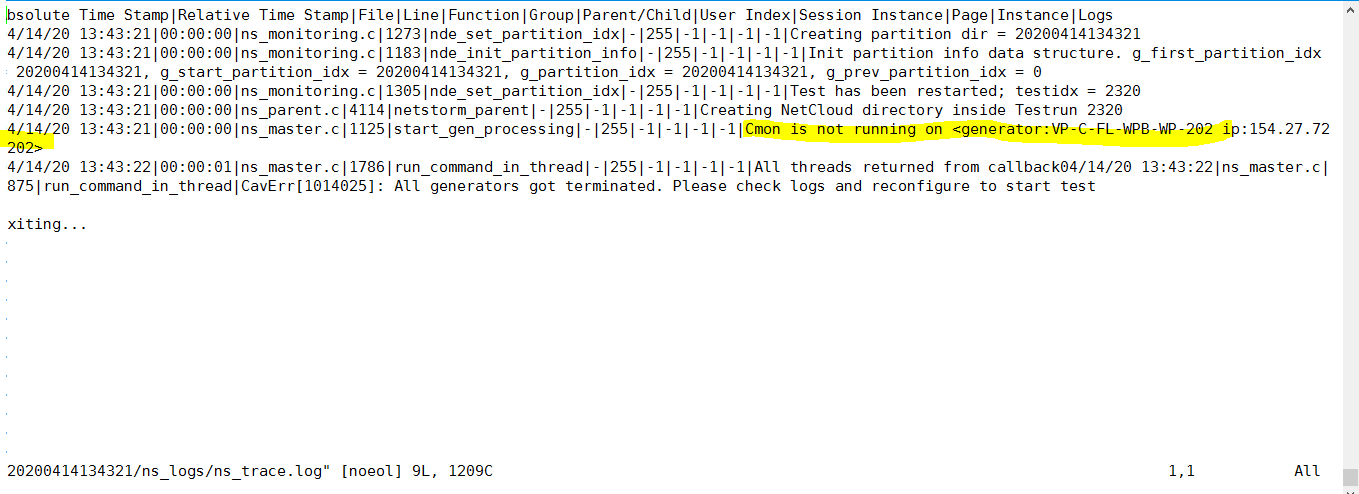

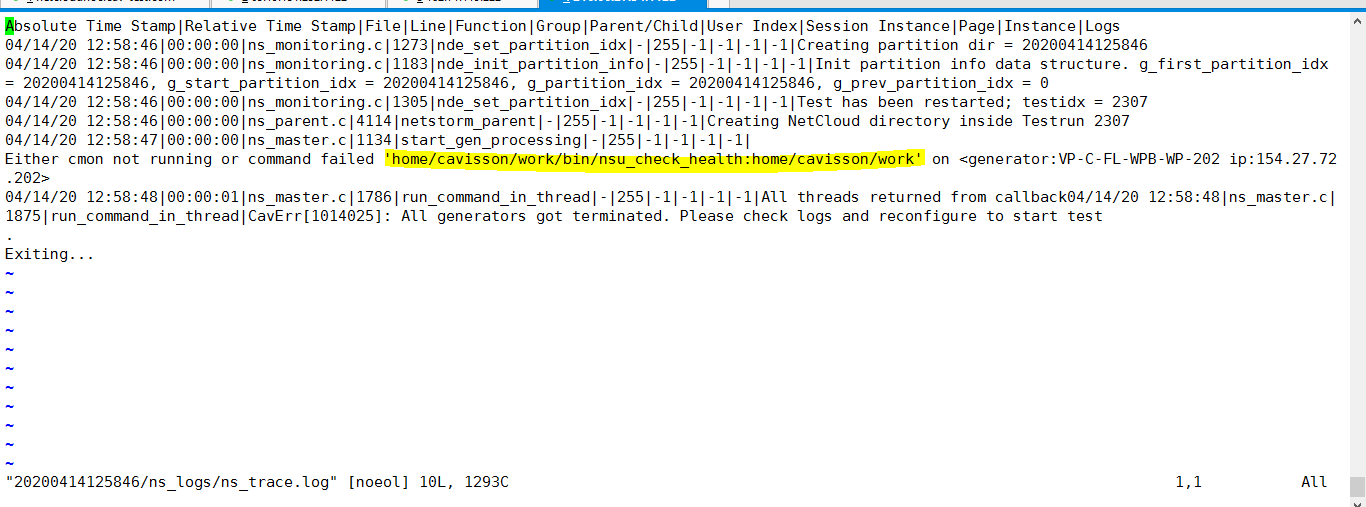

| Steps to Diagnose | Check $NS_WDIR/logs/TRXX/partition/ns_logs/ns_trace.log and $NS_WDIR/logs/TRXX/TestRunOutput.log. The test stops, or CVMs are killed, when a core dump occurs in product code or in the system kernel. Check dmesg -T for segfault entries, and look for a core file in /home/cavisson/core_files. Open the core file in gdb, take a backtrace, and analyse the frames where the dump was created. |

| Command Used | 1) vi $NS_WDIR/logs/TRXX/partition/ns_logs/ns_trace.log 2) vi $NS_WDIR/logs/TRXX/TestRunOutput.log |

| Solution | Contact the Cavisson product team for a code fix. |
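The core dump checks above can be sketched as a pair of small shell helpers. `segfault_lines` and `latest_core` are hypothetical names introduced for illustration; the binary to pass to gdb depends on which process crashed and is left as a placeholder.

```shell
# segfault_lines: reads kernel log text (for example `dmesg -T` output) on
# stdin and prints only the lines that mention a segfault.
segfault_lines() {
    grep -i 'segfault' || true
}

# latest_core DIR: prints the name of the most recently modified entry in
# DIR, or nothing if DIR is empty or missing.
latest_core() {
    ls -t -- "$1" 2>/dev/null | head -n 1
}

# Usage on the affected machine:
#   dmesg -T | segfault_lines
#   core=$(latest_core /home/cavisson/core_files)
#   gdb <path-to-crashed-binary> "/home/cavisson/core_files/$core"
#   (gdb) bt        # print the backtrace of the crashed thread
```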

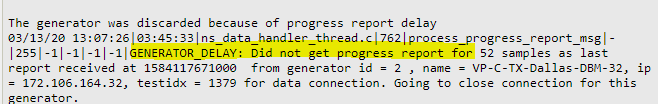

Generators got discarded |

| Possible Reasons #4 | Old or faulty kernel on the generator machine. |

| Steps to Diagnose | Check the kernel version on the generator with the Linux command uname -r. |

| Command Used | uname -r |

| Solution | Upgrade to the latest kernel. |
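uname -r only prints the version; deciding whether it is too old needs a comparison against a baseline. A minimal sketch, assuming GNU `sort -V` is available on the generator; `kernel_at_least` is a hypothetical helper and the baseline `4.15.0` is a placeholder, not a version recommended by Cavisson.

```shell
# kernel_at_least CURRENT MINIMUM: succeeds if CURRENT is the same as or
# newer than MINIMUM under `sort -V` version ordering.
kernel_at_least() {
    lowest=$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)
    [ "$lowest" = "$2" ]
}

# Usage (4.15.0 is a placeholder baseline):
#   kernel_at_least "$(uname -r)" "4.15.0" || echo "kernel is older than the baseline"
```

Version ordering matters here because a plain string comparison would rank 4.9 above 4.15.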

Getting 100% failure on generators |

| Possible Reasons #2 | G_TRACING keyword is not enabled in the scenario |

| Steps to Diagnose | Check the scenario for the G_TRACING keyword. |

| Command Used | Check the KeywordDefination.dat file in $NS_WDIR/etc |

| Solution | Use the correct keyword in the scenario. |

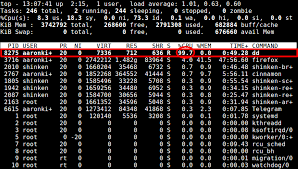

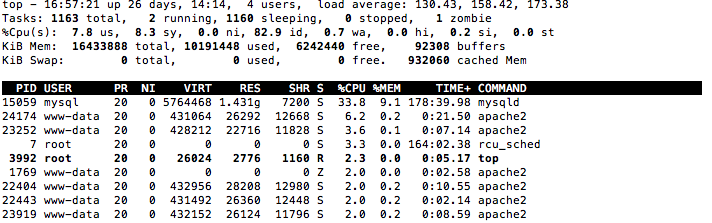

CPU utilization is high |

Load Average is High |

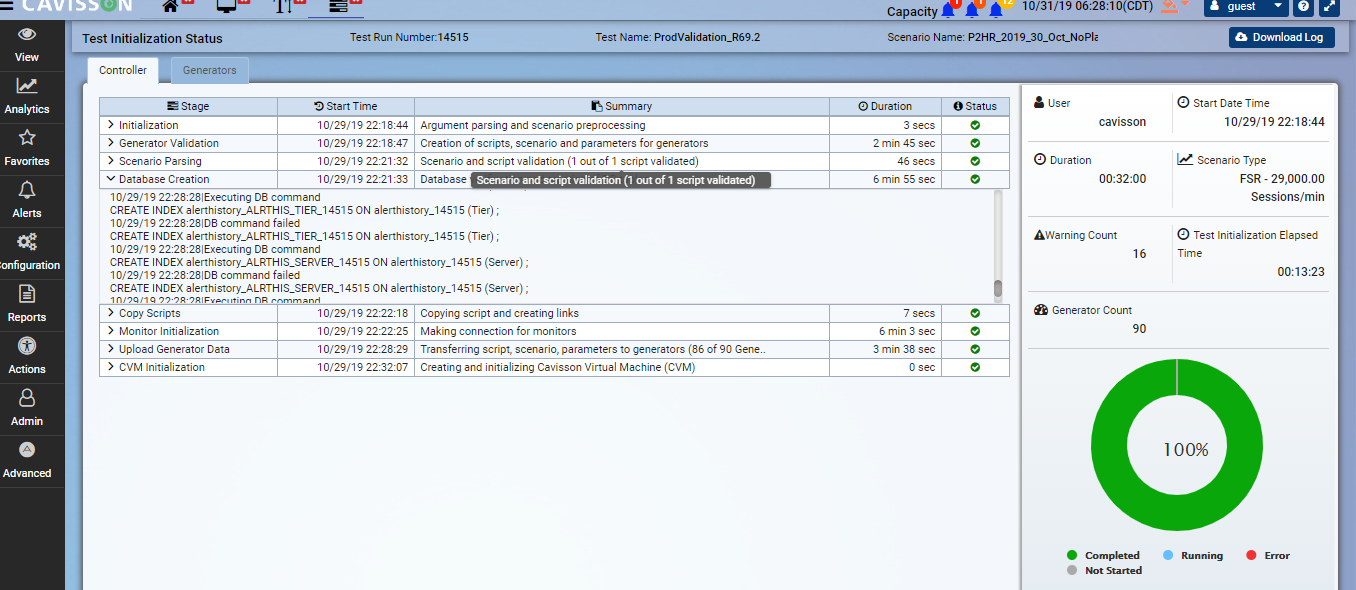

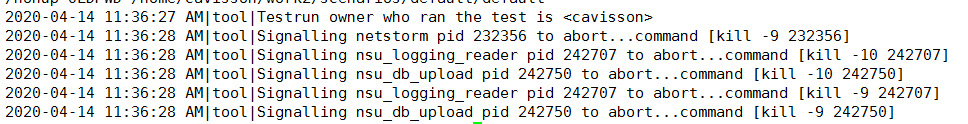

NetCloud test stuck on database creation |

| Possible Reasons #2 | nsu_db_upload processes from older test runs, which are no longer running, are still alive. |

| Possible Reasons #3 | Older nia_file_aggrigator processes are still running. |

| Steps to Diagnose | 1) Look for leftover processes with ps -ef | grep nsu_db_upload. 2) Check with nsu_show_all_netstorm whether any running test corresponds to those processes. |

| Commands to validate | 1) ps -ef | grep nsu_db_upload 2) nsu_show_all_netstorm 3) kill -9 pid |

| Solution | Stop these older processes by killing them. |
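A plain ps -ef | grep nsu_db_upload also lists the grep command itself. The sketch below extracts only the PIDs of matching processes so they can be cross-checked against nsu_show_all_netstorm before anything is killed. `pids_for` is a hypothetical helper, not part of the product.

```shell
# pids_for NAME: reads `ps -ef` output on stdin and prints the PID (second
# column) of every process whose line contains NAME, ignoring the header
# row and any grep command.
pids_for() {
    awk -v name="$1" '$2 ~ /^[0-9]+$/ && index($0, name) && !index($0, "grep") { print $2 }'
}

# Usage on the controller:
#   ps -ef | pids_for nsu_db_upload
#   ps -ef | pids_for nia_file_aggrigator
#   nsu_show_all_netstorm     # confirm that no running test owns these PIDs
#   kill -9 <pid>             # only for PIDs that belong to old test runs
```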

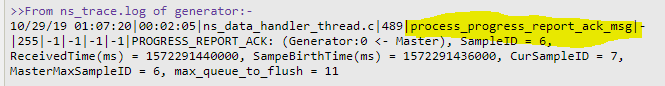

NetCloud test fails in the middle of a run |

| Possible Reason #1 | A core dump on the controller, caused by a fault in the code or in the system kernel. |

| Possible Reason #2 | NVMs failed with a core dump on the failed generators. |

| Steps to Diagnose | Check $NS_WDIR/logs/TRXX/partition/ns_logs/ns_trace.log and $NS_WDIR/logs/TRXX/TestRunOutput.log. The test stops, or CVMs are killed, when a core dump occurs in product code or in the system kernel. Check dmesg -T for segfault entries, and look for a core file in /home/cavisson/core_files. Open the core file in gdb, take a backtrace, and analyse the frames where the dump was created. |

| Commands to validate | gdb |

| Solution | Contact the Cavisson client support team. |

| Possible Reasons #3 | Not enough space left on controller/generators |

| Steps to Diagnose | 1) Check free space with df -h. 2) The same check can be run remotely with the nsu_server_admin command. |

| Commands to validate | 1) df -h 2) nsu_server_admin -s ip -c "df -h" |

| Solution |
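Scanning df output by eye across many generators is error-prone, so the check can be reduced to the filesystems that are actually close to full. A minimal sketch: `full_filesystems` is a hypothetical helper, and the threshold of 90 percent is an example value, not a documented limit.

```shell
# full_filesystems LIMIT: reads `df -h` or `df -P` output on stdin and
# prints "mountpoint usage%" for each filesystem at or above LIMIT percent.
# Rows whose fifth column is not a percentage (such as the header) are skipped.
full_filesystems() {
    awk -v limit="$1" '$5 ~ /^[0-9]+%$/ {
        use = $5
        sub(/%/, "", use)
        if (use + 0 >= limit + 0) print $6, $5
    }'
}

# Usage on the controller or a generator:
#   df -h | full_filesystems 90
```

The same filter could be applied to the output of nsu_server_admin -s ip -c "df -h", assuming that command prints the remote df output unchanged.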

| Possible Reasons #4 | Someone stopped the test forcefully. |

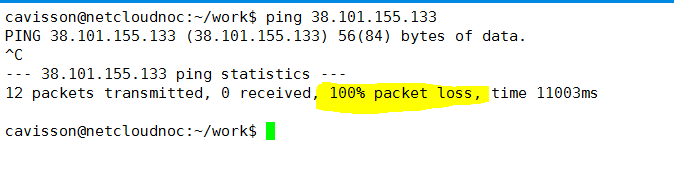

| Steps to Diagnose | Ping generator IP |

| Commands to validate | ping ip |

| Solution | Contact the CS team. |

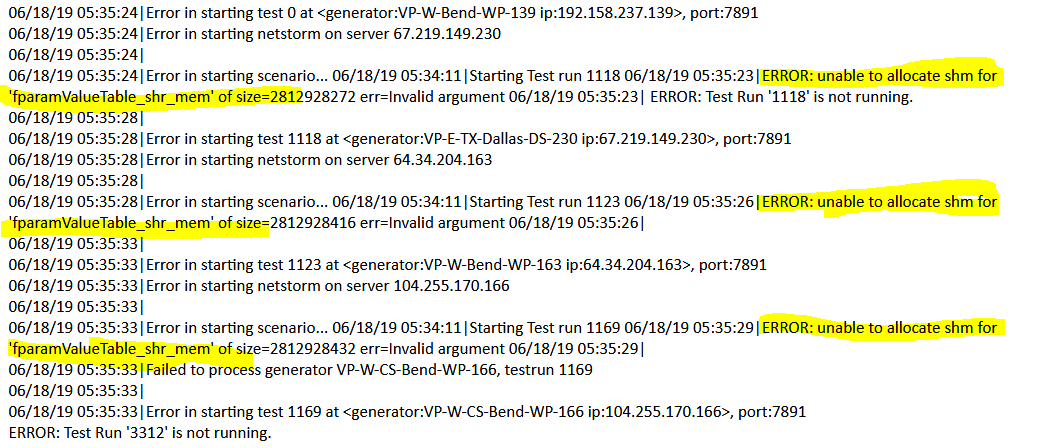

Not able to start test due to shared memory issue |

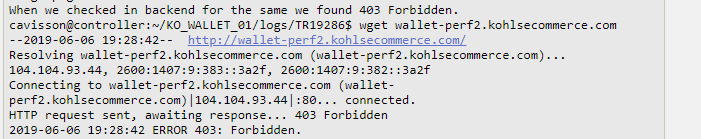

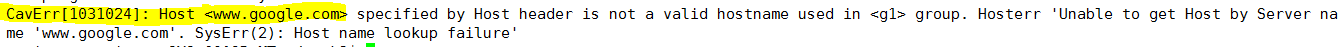

Unable to start test due to unknown host error |

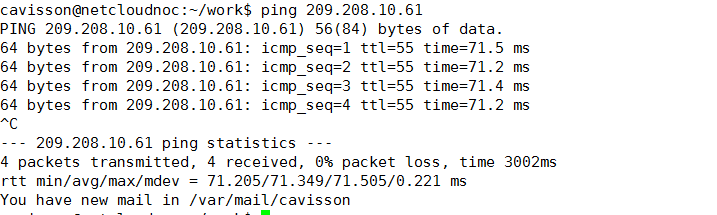

| Possible Reasons #2 | The host is not reachable from the source IP. |

| Steps to Diagnose | Check the host with the ping command or with wget. |

| Commands to validate | 1) ping hostname 2) wget hostname |

| Solution | Get the source IP whitelisted on the host application. |
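An unknown host error can mean that the name does not resolve at all, which is a different problem from a resolvable host that blocks the source IP. The sketch below separates the two cases before ping or wget is tried. It assumes `getent` is available on the machine; `host_resolves` is a hypothetical helper and `hostname` stands for the application host used in the scenario.

```shell
# host_resolves NAME: succeeds if NAME resolves through the system
# resolver (DNS or /etc/hosts), as reported by `getent hosts`.
host_resolves() {
    getent hosts "$1" >/dev/null 2>&1
}

# Usage from the controller or a generator:
#   if host_resolves hostname; then
#       ping -c 3 hostname     # name resolves: test reachability next
#   else
#       echo "hostname does not resolve: check DNS or /etc/hosts"
#   fi
```

If the name resolves but ping or wget still fails, whitelisting of the source IP is the more likely cause.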